- 31 Jan 2025

Attribution FAQ

Frequently Asked Questions (FAQs) about Attribution

What are tracked installs and reported installs? Why do those two installs differ?

When using Tenjin, you’ll notice that there are two types of installs: Reported Installs and Tracked Installs.

Reported Installs are installs that the ad network claims to drive for each of your campaigns. Tracked Installs are installs that an unbiased 3rd party attributes to an ad network’s campaign, taking into account the other campaigns you’re running on other ad networks. When looking at Tracked Installs, you are looking at a holistic install metric - it’s based on all campaigns, including ones you’re running on other ad networks. Reported Installs, on the other hand, are calculated in a vacuum by the ad network you’re running on - they’re based only on what that ad network sees.

So are Reported Installs supposed to be the same as Tracked Installs? Not always. The point of attribution ISN’T to make Tracked Installs = Reported Installs. In fact, sometimes attribution is supposed to do the exact opposite. The point of attribution is to take a holistic view of ALL the campaign activity on the various ad networks you’re using at the same time, and attribute users to the most appropriate campaign. Only an unbiased 3rd party can do this.

For Example:

You’re running campaigns on two ad networks at the same time, Applovin and Tiktok. You set the CPIs to $1.00 for each campaign on each ad network. A unique user clicks on your Applovin campaign, then later that same user clicks on your Tiktok campaign, then installs the app. What happens? How is everything tracked for that user?

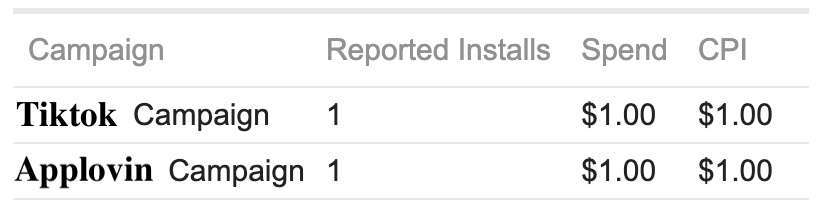

Since Applovin and Tiktok don’t talk to each other, there is no way for them to reconcile the unique user interacting with both ad campaigns on separate networks at the same time. As a result, the Reported Install count for Applovin would be “1” and the Reported Install count for Tiktok would be “1” also. Here’s what we have so far:

But this makes no sense! We only acquired a single unique user! A unique user should only be associated with one of the campaigns!

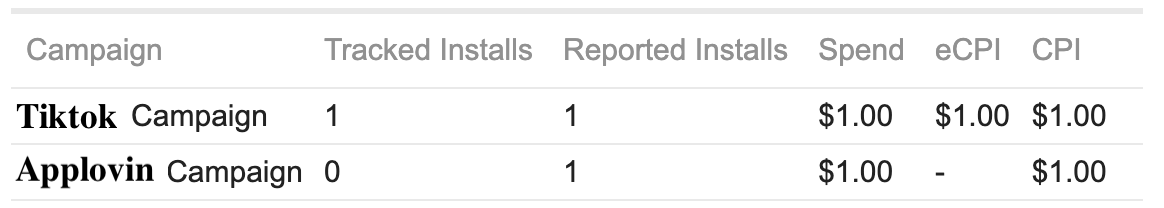

That’s what attribution solves. When you use a 3rd party attribution provider like Tenjin, Tenjin places the user in the Tiktok campaign as a “Tracked Install” (based on the last click in this case). This way, the unique user who clicked on Applovin and then Tiktok is associated with Tiktok’s campaign and NOT Applovin’s. In this example, the counts for Tracked Installs and Reported Installs would look like this:

Now the unique user is in the appropriate ad network campaign for downstream analysis of your campaigns. If the user starts generating LTV, then you know what the effectiveness of your spend is. This is the only way you can measure your ROI on an apples-to-apples basis.

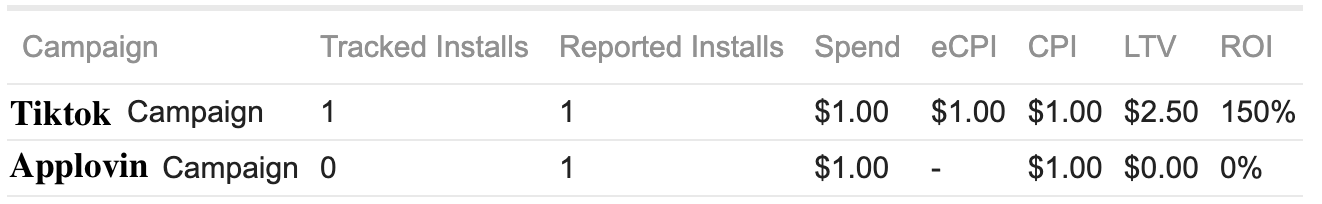
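To make the last-click rule concrete, here is a minimal Python sketch of the example above. It assumes each network counts any install it saw a click for, while the attribution provider credits only the most recent click before the install. The `Click` type, the function names, and the timestamps are hypothetical. Real attribution also applies attribution windows and device matching, which this sketch leaves out.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class Click:
    network: str         # ad network that recorded the click (hypothetical data)
    timestamp: datetime  # when the click happened


def reported_installs(clicks: List[Click]) -> Dict[str, int]:
    """Each network sees only its own clicks, so every clicked network claims the install."""
    return {c.network: 1 for c in clicks}


def tracked_install(clicks: List[Click], install_time: datetime) -> Optional[str]:
    """Last-click rule: credit the network with the latest click before the install."""
    eligible = [c for c in clicks if c.timestamp <= install_time]
    if not eligible:
        return None  # no qualifying click: treat the install as organic
    return max(eligible, key=lambda c: c.timestamp).network


# One unique user clicks Applovin, later clicks Tiktok, then installs.
clicks = [
    Click("Applovin", datetime(2025, 1, 1, 10, 0)),
    Click("Tiktok", datetime(2025, 1, 1, 12, 0)),
]
install_time = datetime(2025, 1, 1, 12, 5)

print(reported_installs(clicks))              # {'Applovin': 1, 'Tiktok': 1}
print(tracked_install(clicks, install_time))  # Tiktok
```

Two Reported Installs but only one Tracked Install is exactly the mismatch the example describes.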

So let’s say the acquired user generates a 90-day LTV of $2.50. Your analysis would show the following:

In this case, ROI = (LTV / Spend) - 1.

This lets you know your Tiktok campaign is more effective than your Applovin campaign for that unique user.
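The ROI arithmetic for this example can be checked with a few lines of Python. The inputs are one reading of the example, not Tenjin output. Each network is assumed to bill its $1.00 CPI for the install it reported. The user's $2.50 90-day LTV is credited only to the network that received the Tracked Install.

```python
def roi(ltv: float, spend: float) -> float:
    """ROI = (LTV / Spend) - 1."""
    return ltv / spend - 1


# Assumed inputs: $1.00 CPI billed by each network for its reported install,
# and the single user's $2.50 90-day LTV credited to Tiktok (the tracked install).
spend = {"Applovin": 1.00, "Tiktok": 1.00}
ltv = {"Applovin": 0.00, "Tiktok": 2.50}

for network in spend:
    print(f"{network}: ROI = {roi(ltv[network], spend[network]):.0%}")
# Applovin: ROI = -100%
# Tiktok: ROI = 150%
```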

Why are there discrepancies between tracked and reported installs?

Usually, a discrepancy of up to 30% for self-attributing networks (SANs) and up to 10% for non-SANs is expected, for the reasons below:

- Ad networks ignore 3rd party attribution technology - Meta, Twitter, Google and a few others rely on their own internal technology to tell attribution partners when an install should be awarded to their networks. When users of these networks overlap, the 3rd party technology can’t stop an ad network from claiming a Reported Install, even when the attribution technology picks only one ad network to associate the download with.

- Install callbacks and click URLs are not set up properly - The most fixable of the problems is when click URLs and install callbacks are set up improperly by the advertiser. This can usually get fixed right away by double checking that the proper click URLs are getting used and the install callback in the attribution technology is functioning properly.

- Inaccurate methods for tracking clicks and installs are used with ad network SDKs - When an ad network does not support collection of an advertising ID (IDFA or GAID), the 3rd party attribution technology will generally rely on probabilistic attribution, which is less precise.

- Doubling up on ad network and attribution SDKs - Attribution systems send install callbacks to ad networks to notify an ad network of an install. If the app has a duplicate SDK that sends an ad network install callbacks, there can be double counting of Reported Installs.

- Ad networks use a different attribution window from ours (usually for SANs).
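A short sketch shows how a window mismatch alone can make the two counts diverge for the same click and install. The 7-day and 28-day window lengths below are hypothetical values chosen for illustration. They are not Tenjin's or any network's actual defaults.

```python
from datetime import datetime, timedelta


def within_window(click_time: datetime, install_time: datetime, window: timedelta) -> bool:
    """True if the install happened after the click and no later than `window` after it."""
    return timedelta(0) <= install_time - click_time <= window


click_time = datetime(2025, 1, 1, 12, 0)
install_time = datetime(2025, 1, 10, 12, 0)  # 9 days after the click

# Hypothetical window lengths, for illustration only.
attribution_provider_window = timedelta(days=7)
ad_network_window = timedelta(days=28)

print(within_window(click_time, install_time, attribution_provider_window))  # False
print(within_window(click_time, install_time, ad_network_window))            # True
```

In this case the network would count a Reported Install that the attribution provider does not track.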

Why is Tenjin's retention rate different from retention in other tools?

“Retention” is a simple idea, but there are several details to consider when implementing it. By default, at Tenjin, we calculate “classic” retention: an N-day retained user is one who returns on the Nth day after acquisition. The day a user returns may seem clear and simple, but there are actually two ways to interpret it: (1) using absolute time or (2) using relative time. As an example of absolute time, a user acquired on May 1st and returning on May 2nd is called a 1-day user. However, if that user was acquired at 23:59 May 1 and returned at 00:01 May 2, they really only waited 2 minutes to return.

At Tenjin, by default, we use relative time. Each user has their own “lifetime”, counted in days after acquisition time. Their “birth” is at acquisition on day 0, and day 1 begins 24 hours later. An N-day retained user is one who returns between 24N hours and 24(N+1) hours after acquisition.

N-day retention rate = unique N-day retained users / unique 0-day users

We believe using relative time puts all users on equal footing, no matter what time zone they may be in, or what their circadian rhythm might be. It also enables us to “normalize” our other lifetime metrics, such as cumulative revenue, cumulative ROI, and cost-per-retained user.
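The relative-time definition and the retention-rate formula above can be sketched in Python as follows. The user data is hypothetical. The sketch assumes every installed user counts as a 0-day user. It mirrors the definitions in this answer and is not Tenjin's actual implementation.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def relative_day(install_time: datetime, event_time: datetime) -> int:
    """Day N of a user's lifetime covers [24N h, 24(N+1) h) after install."""
    return (event_time - install_time) // timedelta(hours=24)


def retention_rate(installs: Dict[str, datetime],
                   returns: Dict[str, List[datetime]], n: int) -> float:
    """Unique N-day retained users / unique 0-day users (assumed: all installed users)."""
    retained = {
        user for user, times in returns.items()
        if any(relative_day(installs[user], t) == n for t in times)
    }
    return len(retained) / len(installs)


# Hypothetical users and return visits.
installs = {
    "user_a": datetime(2015, 5, 1, 23, 59),
    "user_b": datetime(2015, 5, 1, 8, 0),
}
returns = {
    "user_a": [datetime(2015, 5, 2, 0, 1)],  # 2 minutes later: still day 0
    "user_b": [datetime(2015, 5, 2, 9, 0)],  # 25 hours later: day 1
}

print(retention_rate(installs, returns, 1))  # 0.5
```

Under absolute time, both users would count as 1-day users. Under relative time, only `user_b` does.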

Tenjin now supports Retention Rate calculation using the UTC-based cohort strategy. With this approach, Day 1 begins at the first UTC midnight following each user’s install timestamp. This method helps align retention rate calculations with other platforms that use UTC-based timestamps, reducing discrepancies in metrics.

To enable this feature for your account, navigate to My Account → Manage User → Cohort Strategy and select the desired option.
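To see why the two strategies can disagree, here is a sketch that puts the UTC-based day index next to the relative-time one. It assumes, as described above, that day 1 starts at the first UTC midnight after install and that each later day starts at the next UTC midnight. The function names and timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def relative_day(install_time: datetime, event_time: datetime) -> int:
    """Default strategy: day N covers [24N h, 24(N+1) h) after install."""
    return (event_time - install_time) // timedelta(hours=24)


def utc_cohort_day(install_time: datetime, event_time: datetime) -> int:
    """UTC-based strategy: count the UTC midnights crossed since install."""
    install_day = install_time.astimezone(timezone.utc).date()
    event_day = event_time.astimezone(timezone.utc).date()
    return (event_day - install_day).days


install = datetime(2025, 5, 1, 23, 59, tzinfo=timezone.utc)
event = datetime(2025, 5, 2, 0, 1, tzinfo=timezone.utc)

print(relative_day(install, event))    # 0: only 2 minutes have passed
print(utc_cohort_day(install, event))  # 1: a UTC midnight was crossed
```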

Why is my x-day retention value still changing? When will it remain constant?

As we saw above, under the relative retention option, an N-day retained user is one who returns between 24N hours and 24(N+1) hours after acquisition. Perhaps the best way to understand this is to follow an example.

- Users belonging to the “Sept 1” cohort were acquired between 2015-09-01 00:00 and 2015-09-01 23:59.

- The latest “Sept 1” user (let’s call him Joe) could have been acquired at 2015-09-01 23:59.

- Joe’s 0th day lasts for 24 hours, ending at 2015-09-02 23:59.

- If Joe returns at any time between 2015-09-02 23:59 and 2015-09-03 23:59, then he gets counted as a 1-day retained user.

- Therefore, the 1-day retention rate for the “Sept 1” cohort is not finalized until 2015-09-04 00:00.

In general, the x-day retention rate for a given cohort is not finalized until AFTER (x+2) FULL days after acquisition. Or in other words, on the (x+3)th day it will remain constant.

For 1-day retention, it may seem strange to wait 3 whole days for this metric to stabilize. Where do these days come from and how do we explain them? In the worst case, a user could be acquired at the end of the day (“Day #1”). His 0th day (initial 24-hour period) doesn’t count towards retention (“Day #2”). And in the worst case, he could return at the end of the 24-hour period of his 1st day (“Day #3”).
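The worst-case reasoning above can be turned into a small date calculation. This sketch assumes the default relative-time strategy and a cohort day that runs from 00:00 to 23:59. `retention_final_at` is a hypothetical helper, not part of any Tenjin API.

```python
from datetime import date, datetime, time, timedelta


def retention_final_at(cohort_date: date, x: int) -> datetime:
    """Earliest moment the x-day retention rate of a daily cohort stops changing.

    Assumes the relative-time strategy: the latest user installs just before the end
    of cohort_date, and their day x ends 24 * (x + 1) hours after install, which is
    (x + 2) days after the cohort day starts.
    """
    cohort_start = datetime.combine(cohort_date, time.min)
    return cohort_start + timedelta(days=x + 2)


print(retention_final_at(date(2015, 9, 1), 1))  # 2015-09-04 00:00:00
```

This reproduces the “Sept 1” example: the 1-day rate settles at 2015-09-04 00:00, and each extra day of x pushes that point back by one day.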

Why isn't Tenjin IAP revenue the same as revenue in iTunes Connect or Google Play Console?

Tenjin collects IAP revenue directly from your SDK, whereas iTunes Connect and Google Play Console report revenue directly from store purchases. Based on our experience, the two revenue figures can differ by up to 20%.

These are the possibilities if you see a large discrepancy.

- Tenjin’s revenue is net (after the 30% Apple App Store / Google Play store cut).

- (Only for iOS) If you don’t validate receipts, Tenjin counts all revenue that gets passed through our SDK. But some revenue may be rejected by Apple. To avoid this, please make sure to use our receipt validation (iOS SDK)

- If you’ve newly integrated our SDK and the app update is voluntary, some users won’t have the Tenjin SDK yet, so you won’t see all revenue in Tenjin. This should go away over time as more users update.
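The first two causes in the list above can be illustrated with a small Python sketch. The `Purchase` type, the prices, and the validation outcomes are hypothetical. The store total is assumed to be gross, before commission. The store cut is a parameter because it can be 30% or 15% depending on how it is configured in Tenjin. The version-adoption effect in the last bullet is not modeled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Purchase:
    gross_price: float   # price the user paid, before the store's commission
    receipt_valid: bool  # hypothetical result of store receipt validation


def store_gross_revenue(purchases: List[Purchase]) -> float:
    """Store-side total, assumed here to be accepted purchases before the store cut."""
    return sum(p.gross_price for p in purchases if p.receipt_valid)


def sdk_net_revenue(purchases: List[Purchase], store_cut: float = 0.30,
                    validate_receipts: bool = True) -> float:
    """Net revenue from SDK-reported purchases, optionally dropping rejected receipts."""
    total = 0.0
    for p in purchases:
        if validate_receipts and not p.receipt_valid:
            continue  # rejected by the store, so excluded when validation is on
        total += p.gross_price * (1 - store_cut)
    return total


purchases = [Purchase(10.00, True), Purchase(5.00, True), Purchase(5.00, False)]

print(round(store_gross_revenue(purchases), 2))
print(round(sdk_net_revenue(purchases), 2))
print(round(sdk_net_revenue(purchases, validate_receipts=False), 2))
```

With validation on, the SDK figure is about 70% of the store figure. Without validation, the rejected purchase inflates it.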

What is the difference between Revenue and LTV?

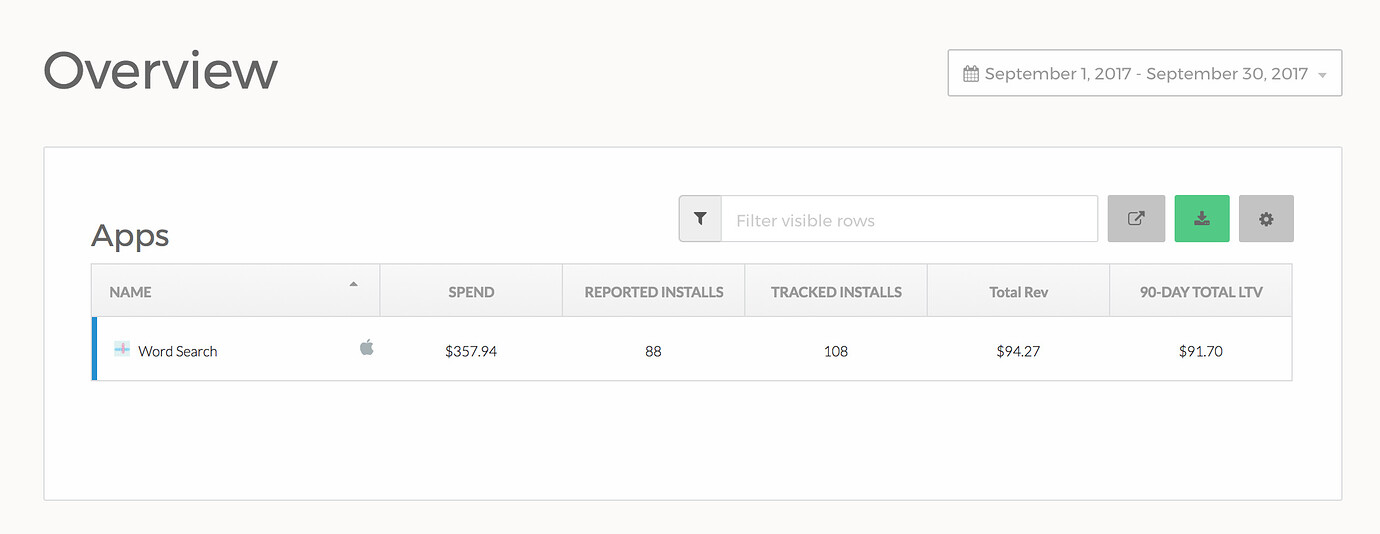

When you say “revenue” in general, there are two types of revenue - cohort or non-cohort. In Tenjin, we have a clear distinction between these two. “Revenue” is always a non-cohort value, and “LTV” is always a cohort value by definition. Let’s look at an example. Assume today is 10/20:

In this example,

- Total Rev = $94.27

- 90-day Total LTV = $91.70

This means the app generated $94.27 in September from all users, regardless of when those users were acquired (non-cohort), and the users acquired in September have generated $91.70 over their lifetime so far (cohort). Revenue is usually useful for managing daily cash flow, while LTV is used to measure your campaign ROI.

Please be aware of this difference when you compare Tenjin numbers with the numbers you see in other tools. We also separate out IAP revenue (or LTV) and ad revenue (or LTV), so you can see where your revenue comes from.
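A minimal sketch of the two definitions follows. It uses made-up records rather than the $94.27 / $91.70 example. `revenue` filters by the date the money was earned. `ltv` filters by install date and counts revenue up to a given day of each user's life. Treating day `days` as inclusive is an assumption about the boundary convention.

```python
from datetime import date
from typing import Dict, List, Tuple

# Hypothetical records: install date per user, and (user, revenue date, amount) events.
installs: Dict[str, date] = {
    "user_a": date(2024, 8, 25),  # acquired before September
    "user_b": date(2024, 9, 10),  # acquired in September
}
events: List[Tuple[str, date, float]] = [
    ("user_a", date(2024, 9, 5), 3.00),
    ("user_b", date(2024, 9, 12), 2.00),
    ("user_b", date(2024, 10, 3), 1.50),
]


def revenue(start: date, end: date) -> float:
    """Non-cohort: all revenue earned in [start, end], whenever the user was acquired."""
    return sum(amount for _, day, amount in events if start <= day <= end)


def ltv(start: date, end: date, days: int) -> float:
    """Cohort: revenue from users acquired in [start, end], earned by day `days` after install."""
    total = 0.0
    for user, day, amount in events:
        installed = installs[user]
        if start <= installed <= end and 0 <= (day - installed).days <= days:
            total += amount
    return total


sept_start, sept_end = date(2024, 9, 1), date(2024, 9, 30)
print(revenue(sept_start, sept_end))  # 5.0: September revenue from both users
print(ltv(sept_start, sept_end, 90))  # 3.5: only user_b was acquired in September
```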

Why does the Revenue not equal the x-day LTV?

Revenue is the daily cashflow over the selected date range.

- Given a date range of Sept 1 to Sept 30, all users generated $94.27 in revenue (IAP or ad revenue) from app activity that occurred in that date range.

x-day LTV is the revenue generated by users acquired (installing the app) during the selected date range, as the cumulative total for X days after installing the app.

- It is important to know what “today” is in relation to the selected date range, because you need to know how “old” the included users are. If today is Oct 20 and the selected date range is Sept 1-30, your “oldest” users have been around for about 50 days (Sept 1 through Oct 20), and your “youngest” users for about 20 days. The 90-day LTV total of $91.70 is all the revenue generated by users in 30 cohorts (one for each day of September). Consequently, for the date range in this example, 50-day LTV, 51-day LTV, …, 89-day LTV, and 90-day LTV would all be the same value, since your oldest users have only been able to generate about 50 days of revenue after install.
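The user ages in this example can be checked with Python's `datetime` module. The year is a hypothetical placeholder and does not change the day counts. The sketch shows both the zero-based day index and the inclusive calendar-day count, which is why the ages above are approximate.

```python
from datetime import date

# Hypothetical year; the month and day values come from the example above.
today = date(2024, 10, 20)
range_start, range_end = date(2024, 9, 1), date(2024, 9, 30)

# Highest day index reached so far, counting the install date as day 0.
oldest_day_index = (today - range_start).days  # users acquired Sept 1
youngest_day_index = (today - range_end).days  # users acquired Sept 30

# Calendar days lived, counting both the install date and today.
oldest_days_lived = oldest_day_index + 1
youngest_days_lived = youngest_day_index + 1

print(oldest_day_index, oldest_days_lived)      # 49 50
print(youngest_day_index, youngest_days_lived)  # 20 21
```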

Will Revenue ever equal x-day LTV?

Probably not. You would need ALL users in a single UTC day (00:00 to 23:59) to have EXACTLY the same install date. This is extremely unlikely.

Why is ROAS on the Tenjin dashboard different from the Applovin dashboard?

This answer assumes your app monetizes through in-app ads.

- Currently, one of the main ways Tenjin allocates ad revenue to calculate LTV or ROAS is by using session counts from the SDK. The publisher API reports ad revenue as a daily, app-level total, so this total must be distributed and assigned to each user’s source channel. We use the proportion of app sessions generated by the user (on that day, in that app) to estimate how much ad revenue to assign to each source channel.

- For example:

  - Data from publisher API:
    - 4/20/2016
    - Cool Game
    - $20 ad revenue

  - Data from Tenjin SDK:
    - 4/20/2016
    - Cool Game
    - 30 Meta sessions
    - 40 Google sessions
    - 30 Twitter sessions

  - Estimated ad revenue for each source channel:
    - 4/20/2016
    - Cool Game
    - $6 Meta ad revenue
    - $8 Google ad revenue
    - $6 Twitter ad revenue

- Cohorted ad revenue is a bit more complicated but uses the same estimation method. The user’s acquisition day and n-th day of life are also taken into account when determining the proportion of app sessions.

- However, AppLovin uses impression-level revenue data (ILRD) to calculate LTV or ROAS. In general, AppLovin’s ROAS is higher than Tenjin’s ROAS because of this difference in allocation logic.
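The daily, non-cohort allocation in the example above is a proportional split. The following Python sketch reproduces the $6 / $8 / $6 figures. The function name is hypothetical. The sketch does not model the extra acquisition-day and day-of-life weighting that the cohorted calculation uses.

```python
from typing import Dict


def allocate_ad_revenue(total_revenue: float,
                        sessions_by_channel: Dict[str, int]) -> Dict[str, float]:
    """Split one day's app-level ad revenue across source channels by session share."""
    total_sessions = sum(sessions_by_channel.values())
    if total_sessions == 0:
        return {channel: 0.0 for channel in sessions_by_channel}
    return {
        channel: total_revenue * sessions / total_sessions
        for channel, sessions in sessions_by_channel.items()
    }


# Figures from the 4/20/2016 "Cool Game" example above.
allocation = allocate_ad_revenue(20.0, {"Meta": 30, "Google": 40, "Twitter": 30})
for channel, amount in allocation.items():
    print(f"{channel}: ${amount:.2f}")
# Meta: $6.00
# Google: $8.00
# Twitter: $6.00
```

Because the split follows session share, a channel whose users see more ads per session can still receive less revenue than ILRD-based attribution would give it. This is one way the dashboards can diverge.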

We now support Ad Mediation LTV and ROAS. You can use the Ad Mediation ROAS metric from the Tenjin dashboard which will be closer to your Applovin MAX ROAS. Details here

Discrepancy between Tiktok conversions and Tenjin tracked installs for iOS apps

- For iOS 14 SKAN campaigns, TikTok reports SKAN installs rather than MMP installs, as described here, so our tracked_installs won't exactly match reported_installs.

Please find the detailed differences between Tenjin and TikTok Attribution here

Why does DAU in Tenjin not match with Firebase?

Discrepancies between Tenjin DAU and Firebase DAU numbers are expected, because the two platforms use different logic for counting and tracking installs and DAU. In Tenjin, we use the first app_open event as the install event, and all subsequent app_open events are recorded as sessions. We also recommend always using the latest Tenjin SDK and initializing it on every OnResume. If you continue to see large data discrepancies, email us at support@tenjin.com with details so we can investigate further.

Why is there a discrepancy between Tenjin 0D ROAS and Mintegral 0D ROAS?

A discrepancy between Tenjin 0D ROAS and Mintegral 0D ROAS is expected due to the different methodologies utilized for ROAS calculations between the two platforms.

- IAA ROAS:

Tenjin employs a session-based aggregation method to compute ad revenue LTV and ROAS, whereas Mintegral uses an ILRD-based revenue approach for its ROAS calculations. This distinction leads to an expected discrepancy of approximately 30%.

- IAP ROAS:

Tenjin calculates LTV and ROAS based on the net IAP amount (after the 30% or 15% app-store commission, depending on the store cut you've configured in Tenjin). In contrast, Mintegral uses the gross IAP amount for its ROAS calculations, resulting in a discrepancy of up to 30%.
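The IAP part of the gap follows directly from the store cut. Here is a minimal sketch with hypothetical campaign figures. It computes ROAS on gross revenue and on net revenue for the same campaign. The net figure comes out lower by exactly the configured cut.

```python
def roas(revenue: float, spend: float) -> float:
    """Return on ad spend as a ratio (1.0 = 100%)."""
    return revenue / spend


# Hypothetical campaign figures, for illustration only.
spend = 100.0
gross_iap = 150.0  # what users paid, before the app-store commission
store_cut = 0.30   # or 0.15, depending on the store cut configured in Tenjin

gross_roas = roas(gross_iap, spend)                  # gross basis (as described for Mintegral)
net_roas = roas(gross_iap * (1 - store_cut), spend)  # net basis (as described for Tenjin)
relative_gap = 1 - net_roas / gross_roas             # equals store_cut

print(f"gross ROAS {gross_roas:.0%}, net ROAS {net_roas:.0%}, gap {relative_gap:.0%}")
```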

Upon successful configuration and setup, you should be able to start ROAS campaigns with Mintegral, despite the expected discrepancies.

For further clarification or information, kindly reach out to us at support@tenjin.com.
